csvbase is a simple website for sharing table data. Join the discord.

DuckDB isn't just fast

A whistlestop tour of the cool bits of DuckDB

2024-05-30

by Cal Paterson

DuckDB is a single file SQL database. It's designed for data analysis and so, probably because of the bent of people who are into that sort of thing, a lot of the evaluations of it end up being quantitative. This isn't just true of DuckDB - most comparisons of most data tools tend to focus on the measureable.

That means they mainly look at speed. And DuckDB generally does well.

The notes on benchmark performance graphs often read "higher is better" and performance improvements are even called "optimisations". But the truth is, at least as a user, once performance reaches a satisfactory level - enough for your own data analysis to complete in a reasonable about of time - there is no further benefit from increased speed. Instead of being called "performance optimisation" it should probably be called "performance satisfaction" as once it is satisfactory you have finished.

Usability is different. The whole point of computers is as an aid to productivity so user-friendliness is actually the bit you want to optimise. Unlike speed, being easier to use is always better and there is very little limit to that. So it's "usability improvements" that should be called "optimisations" but perhaps the relevant ships on all of these terms have sailed.

Anyway to balance out the force out I want to demonstrate some usability benefits of DuckDB. Mostly, they cannot be measured:

- Good developer ergonomics

- It handles larger than memory ("out of core") datasets

- Easy to install & run

Ergonomics

DuckDB takes care to make the common stuff straightward. For example, you can create tables (including inferring the table schema) straight from input files:

-- loading a table from a parquet file

CREATE TABLE stock_exchanges AS

FROM

read_parquet(

"https://csvbase.com/meripaterson/stock-exchanges.parquet"

);

Looking at the schema of that table:

-- the output of: .schema stock_exchanges

CREATE TABLE stock_exchanges (

csvbase_row_id bigint,

Continent varchar,

Country varchar,

"Name" varchar,

MIC varchar,

"Last changed" date

);

DuckDB has inferred all the columns, including their types, from the Parquet file. Brill. And as you can see, that Parquet file can come from anywhere on the web, it need not be local. That's perhaps not a big advance on some of the common dataframe libraries, but it is a big advance in the world of SQL-based tools, most of which can only read CSV (not Parquet) and then also require the schema to be created beforehand.

And you don't actually have to create a table first in order to query the data.

The read_parquet function returns a relation and so can act as a sub-query.

A specific example of that, this time with a csv file:

-- pulling down the most recent EUR:USD exchange rate

SELECT

rate

FROM

read_csv_auto("https://csvbase.com/table-munger/eurofxref.csv")

WHERE

currency = 'USD';

So you can freely query parquet and csv files on the web with the minimum of fuss.

But how much of SQL does DuckDB support? A very wide swathe. I haven't done any comprehensive analysis but of the stuff I use in Postgres I haven't found much if anything that isn't also implemented in DuckDB.

For example, window functions are fully supported:

-- smoothed history of the eur:usd exchange rate

SELECT

date,

avg(rate) OVER (

ORDER BY date

ROWS BETWEEN 100 PRECEDING AND CURRENT ROW

) AS rolling

FROM

read_parquet('https://csvbase.com/table-munger/eurofxref-hist.parquet')

WHERE

currency = 'USD';

And that's not the end of DuckDB making the simple stuff easy. I did the above query at the library on a slow internet connection and DuckDB helpfully started to display a progress bar, which even Postgres doesn't have.

Then, when the query was done it politely avoided swamping my terminal with the 6500 lines of output by abbreviating them, just like Pandas does.

Datasets larger than memory

One of the problems that arises with more than a few data tools is that once the dataset gets bigger than the computer memory (or gets within 50%) the tool breaks down.

This is an underrated source of pain. Sometimes I've seen someone write something quickly with one tool as a quick prototype. The prototype works great and you want to run it on the full dataset - but wait - you can't. You're getting memory errors, heavy swapping, etc. The problem is that the tool was loading the whole dataset into memory and so suddenly you have to change technology. Always an unpleasant discovery.

DuckDB fully supports datasets larger than memory. That's in contrast to Pandas, which starts to struggle once your dataframe is >50% of system memory. The majority of dataframe libraries do not support datasets larger than memory or require alternate, more limited, modes of operation when using them - but in DuckDB everything works.

Single file, single machine model - and the magic of WASM

DuckDB (which, like csvbase, is an

open source project) gets compiled to a

single executable file, duckdb. That means trying it out just means copying

duckdb onto your computer and running it. Find the right executable for your

machine here.

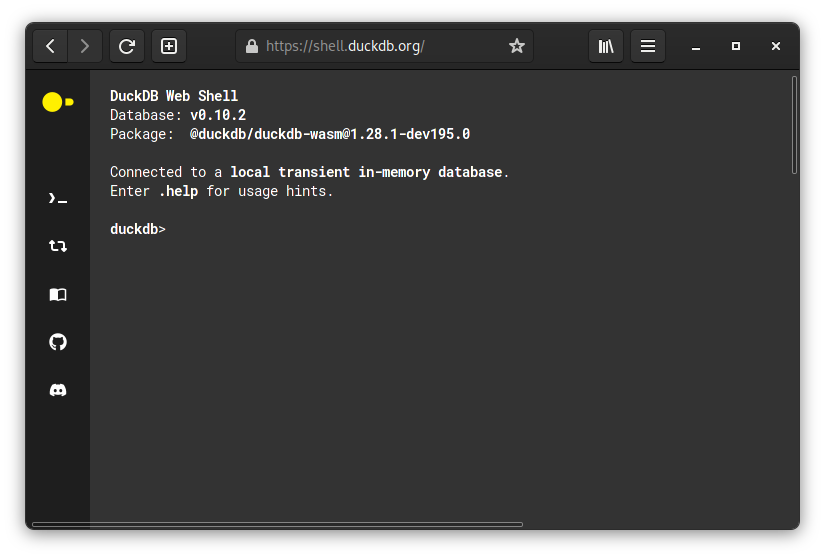

But it actually gets even easier than that. Through the magic of WASM you can experience the full majesty of DuckDB directly in your browser on shell.duckdb.org!

shell.duckdb.org is an based on the WASM (aka

WebAssembly) target of the duckdb

build. WASM is a newish binary format that allows you to run native code

(think: .exe files) inside a web browser. It's not quite as fast as real

native code, but

it's usually close enough and has the key advantage that you can execute random

binaries in a sandboxed virtual machine - mostly without rewriting them.

As a result shell.duckdb.org is fully powered - it can be because everything

is running in your browser, not on a server. You can use shell.duckdb.org to

import files off the web, you have the full SQL dialect, you can execute

long-running queries, whatever you want. And you can even share sessions as

links. Try this one:

DuckDB as a lo-fi dataframe library

DuckDB also has good quality integration with the lingua franca of data analysis. For better or worse that means: Python.

First, install the DuckDB python library (and csvbase's client, which I will use later).

pip install duckdb csvbase-client

Now you can execute queries inside Python:

import duckdb

duckdb.sql("select 1")

Easy enough. But there is one more trick: you can query return values.

That means you you can start to do imperative-style programming to build up a bigger data operation step by step - in an analogous way to how you would write dataframe code. A worked example:

# get all stock exchanges

stock_exchanges = duckdb.sql('''

SELECT

*

FROM

read_parquet(

"https://csvbase.com/meripaterson/stock-exchanges.parquet"

)

''')

# exclude non-North American exchanges

na_stock_exchanges = duckdb.sql("""

SELECT

*

FROM

stock_exchanges -- a variable reference to the above

WHERE

"Continent" = 'North America'

""")

# get the MIC codes as a Python set

na_mic_codes = {

t[0] for t in duckdb.sql("""

SELECT

"MIC"

FROM

na_stock_exchanges

""").fetchall() if t is not None

}

Allowing for dataframe-style programming starts to bridge the benefits of SQL with the benefits of dataframes. You get all the benefits of SQL:

- Lazy evaluation

- The query planner (ie: an optimising compiler)

- That SQL is already very well known

And then you also get the benefits of dataframes

-

Easy to write larger programs by doing larger operations step-by-step

- without resorting to string concat tricks or sprocs

-

Can use an imperative language to build up your data operation

- and wrap it

That's not to say that this is a fully developed replacement for Pandas. Pandas' API still does a lot more than just this. But being able to build up larger programs using the dataframe-style of programming certainly makes them easier to write.

Saving your game with csvbase (fsspec)

I wrote before about how csvbase's client library is designed to slot in to anything by being written against a standard API called "fsspec". I gave Pandas, Polars and Dask as examples but the same is true for DuckDB:

import duckdb, fsspec

# you'd put this bit into __init__.py

duckdb.register_filesystem(fsspec.filesystem('csvbase'))

duckdb.sql("""

COPY stock_exchanges TO

'csvbase://calpaterson/duckdb-example?public=true' (HEADER, DELIMITER ',')

""")

And it's not just csvbase that implements fsspec but plenty of others like Google Drive, SFTP, HFDS - there are lots and lots of implementations. Find a list of them this way:

from fsspec.registry import known_implementations; import pprint

pprint.pprint(known_implementations)

The majority of Python-based data libraries have support for fsspec so this is nothing particularly special - but it's just nice to know that DuckDB can easily plug into anything that already has an fsspec implementation.

Scale - "lower is better"

After speed, the next much-discussed quantitative dimension is "scalability". Scale is probably an even more vexed topic than speed because while more speed is not always better it at least does no harm. Greater scale though, usually comes with greater complexity.

DuckDB does not scale to thousands of machines. Apache Spark does though, and is now the established "big tech company" way to do larger data analyses. But the hassle involved in Spark is actually considerable. Even the cloud services that take a huge bite out of your team's monthly budget don't really make all of the hassle go away.

In my view, scale is not just a one-way road. Scaling down is just as important - perhaps more - than scaling up. Down, down, down - to a single person trying to get stuff done, not an uncommon scale in the field of data analysis.

DuckDB operates on this scale and it requires very little of you. If you're doing data analysis you probably already know SQL. DuckDB supports larger-than-memory data. And there isn't a lot to install. That makes it a highly desirable alternative to full blown Spark code for many many cases.

See also

csvbase now supports tables backed by git repos. It's a nice way to get both easy reads and writes as well as change history of git. You can also use it to publish csv files from repos onto the web (including private repos).

As I mentioned, csvbase-client works with

DuckDB via the magic of

fsspec. That includes the

cache - so repeated references to a table don't

pointlessly re-download it each time.

I'm pretty interested in WASM. Perhaps it would be possible to allow people to upload their data cleanup/transformation scripts as wasm binaries and run them on csvbase each time an upstream dataset changed? Write to me if you're interested in this.

DuckDB seems to have come out of the Netherland's national computer science and maths institute, CWI. Many other columar databases have links with CWI, including MonetDB as well as Snowflake. Clearly there is something in the water at CWI.

DuckDB is obviously influenced by SQLite. What's the difference? SQLite uses a more traditional "row store" storage system which is ideal for transaction-heavy workloads but less amenable to data analysis workloads then the columnar form of DuckDB.